Director Chris Butler: “Well, I’m flabbergasted!” with producer Arianne Sutner.

Kristin here:

The Oscars are looming large, with the presentation ceremony coming up February 9. But did they ever really go away? As I’ve pointed out before, Oscar prediction has become a year-round obsession for amateurs and profession for pundits. I expect on February 10 there will be journalists who start speculating about the 2020 Oscar-worthy films. The BAFTAs (to be given out a week before the Oscars, on February 2) and Golden Globes have also become more popular, though to some extent as bellwethers of possible Oscar winners. The PGA, DGA, SAG, and even obscure critics’ groups’ awards have come onto people’s radar as predictors.

How many people who follow the Oscar and other awards races do so because they expect the results to reveal to them what the truly best films of the year were? How many dutifully add the winners and nominees to their streaming lists if they haven’t already seen them? Probably quite a few, but there’s also a considerable amount of skepticism about the quality of the award-winners. In recent years there has arisen the “will win/should win” genre of Oscar prediction columns in the entertainment press. It’s an acknowledgement that the truly best films, directors, performers, and so on don’t always win. In fact, sometimes it seems as if they seldom do, given the absurd win of Green Book over Roma and BlacKkKlansman. This year it looks as if we are facing another good-not-great film, 1917, winning over a strong lineup including Once upon a Time in … Hollywood, Parasite, and Little Women.

Still, even with a cynical view of the Oscars and other awards, it’s fun to follow the prognostications. It’s fun to have the chance to see or re-see the most-nominated films on the big screen when they’re brought back to theaters in the weeks before the Oscar ceremony. It’s fun to see excellence rewarded in the cases where the best film/person/team actually does win. It was great to witness Laika finally get rewarded (and flabbergasted, above) with a Golden Globe for Missing Link as best animated feature. True, Missing Link isn’t the best film Laika has made, but maybe this was a consolation prize for the studio having missed out on awards for the wonderful Kubo and the Two Strings and other earlier films.

It’s fun to attend Oscar parties and fill out one’s ballot in competition with one’s friends and colleagues. On one such occasion it was great to see Mark Rylance win best supporting actor for Bridge of Spies, partly because he deserved it and partly because I was the only one in our Oscar pool who voted for him. (After all, I knew that for years he had been winning Tonys and Oliviers right and left and was not a nominee you would want to be up against.) Sylvester Stallone was the odds-on favorite to win, and I think everyone else in the room voted for him.

Oscarmetrics

Pundits have all sorts of methods for coming up with predictions about the Oscars. There’s the “He is very popular in Hollywood” angle. There’s the “It’s her turn after all those nominations” claim. There are the tallies of other Oscar nominations a given title has and in which categories. And there is the perpetually optimistic “They deserve it” plea.

For those interested in seeing someone dive deep into the records and come up with solid mathematical ways of predicting winners in every category of Oscars, Ben Zauzmer has published Oscarmetrics. Having studied applied math at Harvard, he decided to combine that with one of his passions, movies. Building up a huge database of facts from the obvious online sources–Wikipedia, IMDb, Rotten Tomatoes, the Academy’s own website, and so on–he could then crunch numbers in all sorts of categories (e.g., for supporting actresses, he checks how far down their names were in the credits).

An early test of the viability of the method came in the 2011 Oscar race, while Zauzmer was still in school. That year Viola Davis (The Help) was up for best actress against Meryl Streep (The Iron Lady). Davis was taken to be the front-runner, but Zauzmer’s math gave Streep a slight edge. Her win reassured Zauzmer that there was something to his approach. His day job is currently doing sports analytics for the Los Angeles Dodgers.

Those like me who are rather intimidated by math need not fear that Oscarmetrics is a book of jargon-laden prose and incomprehensible charts. It’s aimed at a general public. There are numerous anecdotes of Oscar lore. Zauzmer starts with Juliette Binoche’s (The English Patient) 1996 surprise win over Lauren Bacall (The Mirror Has Two Faces) in the supporting actress category. Bacall was universally favored to win, but going back over the evidence using his method, Zauzmer discovered that even beforehand there were clear indications that Binoche might well win.

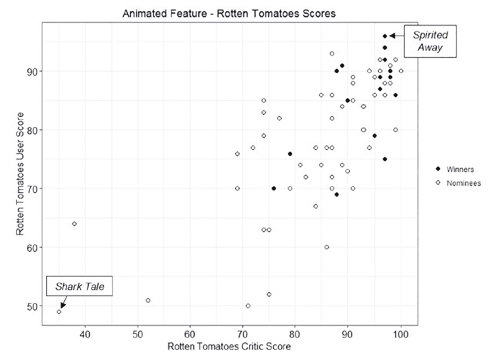

Zauzmer asks a different interesting question in each chapter and answers it with a variety of types of evidence. The questions are not all of the “why did this person unexpectedly win” variety. For the chapter on the best-animated-feature category, the question is “Do the Oscars have a Pixar bias?” It’s a logical thing to wonder, especially if we throw in the Pixar shorts that have won Oscars. Zauzmer’s method is not what one might predict. He posits that the combined critics’ and fans’ scores on Rotten Tomatoes genuinely tend to reflect the perceived quality of the films involved, and he charts the nominated animated features and winners in relation to their scores.

The results are pretty clear, in that Spirited Away is arguably the best animated feature made in the time since the Oscar category was instituted in 2001. In fact, I’ve seen it on some of the lists of the best films made since 2000, and it’s not an implausible choice either way. Shark Tale? I haven’t seen it, but I suspect it deserves its status as the least well-reviewed nominee in this category.

Using this evidence, Zauzmer zeroes in on Pixar, which has won the animated feature Oscar nine times out of its eleven nominations. In six cases, the Pixar film was the highest rated among that year’s nominees: Finding Nemo, The Incredibles, WALL-E, Up, Inside Out, and Coco.

In two cases, Pixar was rated highest but lost to a lower-rated film: Shrek over Monsters, Inc., and Happy Feet over Cars. I personally agree that neither Shrek nor Happy Feet should have won over Pixar. (Sorry, George Miller!)

Zauzmer finds three cases where Pixar did not have the highest rating but won over others that did: Ratatouille beat the slightly higher-rated Persepolis, Toy Story 3 should have lost to the similarly slightly higher-rated How to Train Your Dragon, and Wreck-It Ralph was way ahead on RT but lost to Brave. Wreck-It Ralph definitely should have won, and the sequel probably would have, had it not been unfortunate enough to be up against the highly original, widely adored Spider-Man: Into the Spider-Verse.

The conclusion from this is that the Academy “wrongly” gave the Oscar to Pixar films three times and “wrongly” withheld it twice. As Zauzmer points out, this is “certainly not a large enough gap to suggest that the Academy has a bias towards Pixar.” This is pleasantly counterintuitive, given how often we’ve seen Oscars go to Pixar films.
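For readers who want to check the arithmetic, the tally can be reproduced in a few lines of Python. This is only a sketch built from the cases named above, not Zauzmer’s own code or data: the list simply records, for each of Pixar’s eleven nominees through the March 2018 ceremony, whether the text describes it as the top-rated nominee and whether it won. The function name `tally` and the category labels are made up for illustration.

```python
# Pixar's nominees as described in the text above:
# (title, top_rated_among_that_year's_nominees, won_the_oscar)
# Illustrative only; the flags come from the post, not from a ratings database.
pixar_nominees = [
    ("Monsters, Inc.", True, False),   # lost to Shrek
    ("Finding Nemo", True, True),
    ("The Incredibles", True, True),
    ("Cars", True, False),             # lost to Happy Feet
    ("Ratatouille", False, True),      # Persepolis rated slightly higher
    ("WALL-E", True, True),
    ("Up", True, True),
    ("Toy Story 3", False, True),      # How to Train Your Dragon rated slightly higher
    ("Brave", False, True),            # Wreck-It Ralph rated well ahead
    ("Inside Out", True, True),
    ("Coco", True, True),
]


def tally(nominees):
    """Count nominees in each combination of 'top rated' and 'won'."""
    counts = {
        "top_rated_and_won": 0,
        "top_rated_but_lost": 0,
        "not_top_rated_but_won": 0,
        "not_top_rated_and_lost": 0,
    }
    for _title, top_rated, won in nominees:
        if top_rated and won:
            counts["top_rated_and_won"] += 1
        elif top_rated:
            counts["top_rated_but_lost"] += 1
        elif won:
            counts["not_top_rated_but_won"] += 1
        else:
            counts["not_top_rated_and_lost"] += 1
    return counts


counts = tally(pixar_nominees)
total_wins = counts["top_rated_and_won"] + counts["not_top_rated_but_won"]
# "Wrongly" given minus "wrongly" withheld: the gap Zauzmer calls too small to show bias.
net_gap = counts["not_top_rated_but_won"] - counts["top_rated_but_lost"]

print(counts)
print("wins:", total_wins, "of", len(pixar_nominees))
print("net gap in Pixar's favor:", net_gap)
```

Run as written, it gives nine wins from eleven nominations, three wins without the top rating against two losses with it, and so a net gap of one film in Pixar’s favor.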

Oscarmetrics offers interesting material presented in an engaging prose style, more journalistic than academic, but thoroughly researched nonetheless.

In his introduction, Zauzmer points out that the book only covers up to the March 2018 ceremony. It obviously can’t make predictions about future Oscars, though it might suggest some tactics you could use for making your own if so inclined. Zauzmer has been successful enough in the film arena that he writes for The Hollywood Reporter and other more general outlets. You can track down his work, including pieces on this year’s Oscar nominees, here.

from Observations on film art https://ift.tt/36EC4so
